From lies about election fraud to QAnon conspiracy theories and anti-vaccine falsehoods, misinformation is racing through our democracy. And it is dangerous.

Awash in bad information, people have swallowed hydroxychloroquine hoping the drug will protect them against COVID-19 — even with no evidence that it helps (SN Online: 8/2/20). Others refuse to wear masks, contrary to the best public health advice available. In January, protestors disrupted a mass vaccination site in Los Angeles, blocking life-saving shots for hundreds of people. “COVID has opened everyone’s eyes to the dangers of health misinformation,” says cognitive scientist Briony Swire-Thompson of Northeastern University in Boston.

The pandemic has made clear that bad information can kill. And scientists are struggling to stem the tide of misinformation that threatens to drown society. The sheer volume of fake news, flooding across social media with little fact-checking to dam it, is taking an enormous toll on trust in basic institutions. In a December poll of 1,115 U.S. adults, by NPR and the research firm Ipsos, 83 percent said they were concerned about the spread of false information. Yet fewer than half were able to identify as false a QAnon conspiracy theory about pedophilic Satan worshippers trying to control politics and the media.

Scientists have been learning more about why and how people fall for bad information — and what we can do about it. Certain characteristics of social media posts help misinformation spread, new findings show. Other research suggests bad claims can be counteracted by giving accurate information to consumers at just the right time, or by subtly but effectively nudging people to pay attention to the accuracy of what they’re looking at. Such techniques involve small behavior changes that could add up to a significant bulwark against the onslaught of fake news.

In January, protests closed down a mass vaccination site at Dodger Stadium in Los Angeles. Irfan Khan/Los Angeles Times via Getty Images

Wow factor

Misinformation is tough to fight, in part because it spreads for all sorts of reasons. Sometimes it’s bad actors churning out fake-news content in a quest for internet clicks and advertising revenue, as with “troll farms” in Macedonia that generated hoax political stories during the 2016 U.S. presidential election. Other times, the recipients of misinformation are driving its spread.

Some people unwittingly share misinformation on social media and elsewhere simply because they find it surprising or interesting. Another factor is the medium in which the misinformation is presented: text, audio or video. Of these, video can be seen as the most credible, according to research by S. Shyam Sundar, an expert on the psychology of messaging at Penn State. He and colleagues decided to study this after a series of murders in India that began in 2017, when people circulated via WhatsApp a video purported to show a child abduction. (It was, in reality, a distorted clip of a public awareness campaign video from Pakistan.)

In a study funded by WhatsApp, Sundar recently showed 180 participants in India audio, text and video versions of three fake-news stories as WhatsApp messages. Respondents with lower levels of knowledge on the topic of a story assessed the video versions as the most credible and were most likely to share them. “Seeing is believing,” Sundar says.

The findings, in press at the Journal of Computer-Mediated Communication, suggest several ways to fight fake news, he says. For instance, social media companies could prioritize responding to user complaints when the misinformation being spread includes video, above those that are text-only. And media-literacy efforts might focus on educating people that videos can be highly deceptive. “People should know they are more gullible to misinformation when they see something in video form,” Sundar says. That’s especially important with the rise of deepfake technologies that feature false but visually convincing videos (SN: 9/15/18, p. 12).

One of the most insidious problems with fake news is how easily it lodges itself in our brains and how hard it is to dislodge once it’s there. We’re constantly deluged with information, and our minds use cognitive shortcuts to figure out what to retain and what to let go, says Sara Yeo, a science-communication expert at the University of Utah in Salt Lake City. “Sometimes that information is aligned with the values that we hold, which makes us more likely to accept it,” she says. That means people continually accept information that aligns with what they already believe, further insulating them in self-reinforcing bubbles.

Compounding the problem is that people can process the facts of a message properly while misunderstanding its gist because of the influence of their emotions and values, psychologist Valerie Reyna of Cornell University wrote in 2020 in Proceedings of the National Academy of Sciences.

Thanks to new insights like these, psychologists and cognitive scientists are developing tools that people can use to battle misinformation before it arrives, or that prompt them to think more deeply about the information they are consuming.

One such approach is to “prebunk” rather than debunk after the fact. In 2017, Sander van der Linden, a social psychologist at the University of Cambridge, and colleagues found that when people received true information about climate change and then saw information about a petition that denied the reality of climate science, the benefit of the true information was canceled. Simply mentioning the misinformation undermined people’s understanding of what was true.

That got van der Linden thinking: Would giving people other relevant information before giving them the misinformation be helpful? In the climate change example, this meant telling people ahead of time that “Charles Darwin” and “members of the Spice Girls” were among the false signatories to the petition. This advance knowledge helped people resist the bad information they were then exposed to and retain the message of the scientific consensus on climate change.

Here’s a very 2021 metaphor: Think of misinformation as a virus, and prebunking as a weakened dose of that virus. Prebunking becomes a vaccine that allows people to build up antibodies to bad information. To broaden this beyond climate change, and to give people tools to recognize and battle misinformation more broadly, van der Linden and colleagues came up with a game, Bad News, to test the effectiveness of prebunking (see Page 36). The results were so promising that the team developed a COVID-19 version of the game, called GO VIRAL! Early results suggest that playing it helps people better recognize pandemic-related misinformation.

Take a breath

Sometimes it doesn’t take very much of an intervention to make a difference. Sometimes it’s just a matter of getting people to stop and think for a moment about what they’re doing, says Gordon Pennycook, a social psychologist at the University of Regina in Canada.

In one 2019 study, Pennycook and David Rand, a cognitive scientist now at MIT, tested real news headlines and partisan fake headlines, such as “Pennsylvania federal court grants legal authority to REMOVE TRUMP after Russian meddling,” with nearly 3,500 participants. The researchers also tested participants’ analytical reasoning skills. People who scored higher on the analytical tests were less likely to identify fake news headlines as accurate, no matter their political affiliation. In other words, lazy thinking rather than political bias may drive people’s susceptibility to fake news, Pennycook and Rand reported in Cognition.

When it comes to COVID-19, however, political polarization does spill over into people’s behavior. In a working paper first posted online April 14, 2020, at PsyArXiv.org, Pennycook and colleagues describe findings that political polarization, especially in the United States with its contrasting media ecosystems, can overwhelm people’s reasoning skills when it comes to taking protective actions, such as wearing masks.

Inattention plays a major role in the spread of misinformation, Pennycook argues. Fortunately, that suggests some simple ways to intervene, to “nudge” the concept of accuracy into people’s minds, helping them resist misinformation. “It’s basically critical thinking training, but in a very light form,” he says. “We have to stop shutting off our brains so much.”

With nearly 5,400 people who previously tweeted links to articles from two sites known for posting misinformation — Breitbart and InfoWars — Pennycook, Rand and colleagues used innocuous-sounding Twitter accounts to send direct messages with a seemingly random question about the accuracy of a nonpolitical news headline. Then the scientists tracked how often the people shared links from sites of high-quality information versus those known for low-quality information, as rated by professional fact-checkers, for the next 24 hours.

On average, people shared higher-quality information after the intervention than before. It’s a simple nudge with simple results, Pennycook acknowledges — but the work, reported online March 17 in Nature, suggests that very basic reminders about accuracy can have a subtle but noticeable effect.

For debunking, timing can be everything. Tagging headlines as “true” or “false” after presenting them helped people remember whether the information was accurate a week later, compared with tagging before or at the moment the information was presented, Nadia Brashier, a cognitive psychologist at Harvard University, reported with Pennycook, Rand and political scientist Adam Berinsky of MIT in February in Proceedings of the National Academy of Sciences.

Prebunking still has value, they note. But providing a quick and simple fact-check after someone reads a headline can be helpful, particularly on social media platforms where people often mindlessly scroll through posts.

Social media companies have taken some steps to fight misinformation spread on their platforms, with mixed results. Twitter’s crowdsourced fact-checking program, Birdwatch, launched as a beta test in January, has already run into trouble with the poor quality of user-flagging. And Facebook has struggled to effectively combat misinformation about COVID-19 vaccines on its platform.

Misinformation researchers have recently called for social media companies to share more of their data so that scientists can better track the spread of online misinformation. Such research can be done without violating users’ privacy, for instance by aggregating information or asking users to actively consent to research studies.

Much of the work to date on misinformation’s spread has used public data from Twitter because it is easily searchable, but platforms such as Facebook have many more users and much more data. Some social media companies do collaborate with outside researchers to study the dynamics of fake news, but much more remains to be done to inoculate the public against false information.

“Ultimately,” van der Linden says, “we’re trying to answer the question: What percentage of the population needs to be vaccinated in order to have herd immunity against misinformation?”

A new treatment could restore some mobility in people paralyzed by strokes

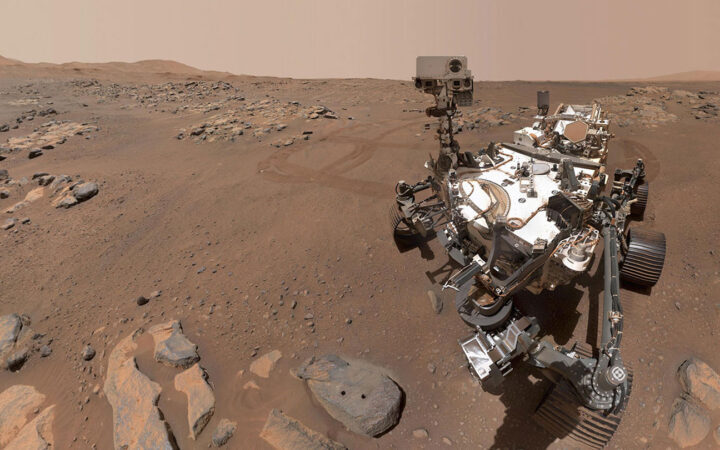

A new treatment could restore some mobility in people paralyzed by strokes  What has Perseverance found in two years on Mars?

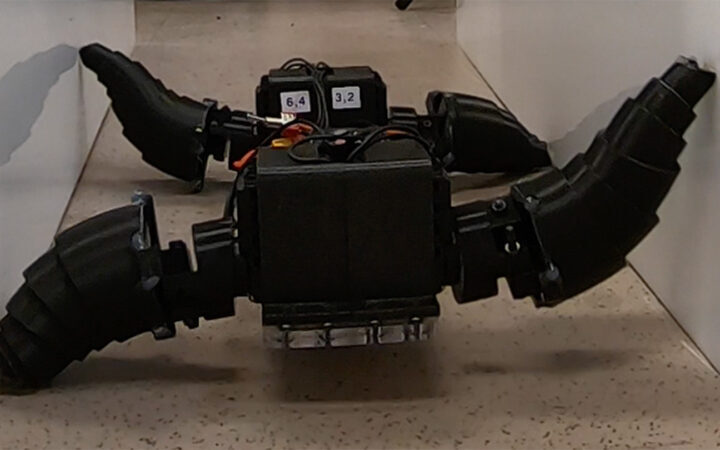

What has Perseverance found in two years on Mars?  This robot automatically tucks its limbs to squeeze through spaces

This robot automatically tucks its limbs to squeeze through spaces  Greta Thunberg’s new book urges the world to take climate action now

Greta Thunberg’s new book urges the world to take climate action now  Glassy eyes may help young crustaceans hide from predators in plain sight

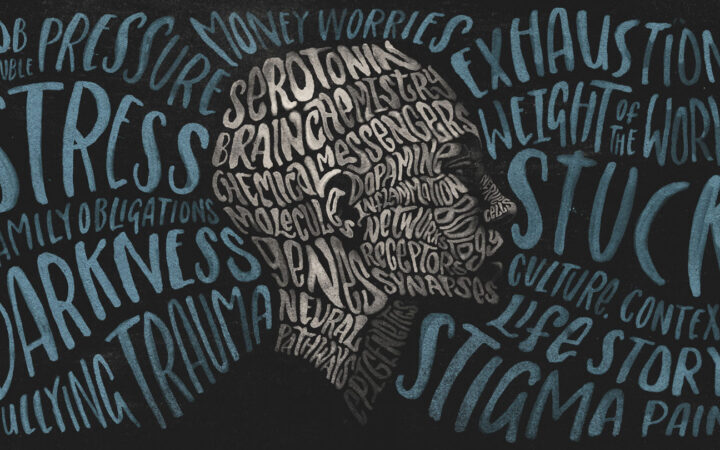

Glassy eyes may help young crustaceans hide from predators in plain sight  A chemical imbalance doesn’t explain depression. So what does?

A chemical imbalance doesn’t explain depression. So what does?