Imagine needing to estimate the total cost of the items in your grocery basket to decide whether to put something back. You might round each potential purchase to the nearest dollar, using the “round-to-nearest” technique commonly taught in school: Round an item’s price up if the cents portion is 50 or more, and round it down if it is less.

This rounding approach works well for quickly estimating a total without a calculator. And it yields the same results when a particular rounding task is repeated. For instance, rounding 4.9 to the nearest whole number will always yield five and rounding 302 to the nearest hundred will always yield 300.
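The school method described above is usually called round half up. As a minimal sketch in Python, note that the built-in `round()` sends exact ties to the nearest even number (`round(2.5)` gives 2), so the standard `decimal` module's `ROUND_HALF_UP` mode is a closer match. The helper name `round_half_up` and the sample basket prices are hypothetical.

```python
from decimal import Decimal, ROUND_HALF_UP


def round_half_up(x: str, step: str = "1") -> Decimal:
    """Round x to the nearest multiple of `step`; exact ties go away from zero.

    Strings are used so that prices such as "1.50" are held exactly.
    """
    value, unit = Decimal(x), Decimal(step)
    # Count how many steps x is, round that count to a whole number, scale back.
    return (value / unit).quantize(Decimal("1"), rounding=ROUND_HALF_UP) * unit


print(round_half_up("4.9"))          # 5
print(round_half_up("302", "100"))   # 300

# Estimating a (hypothetical) basket: 3 + 2 + 7 = 12, versus an exact 12.24.
basket = ["3.49", "1.50", "7.25"]
estimate = sum(round_half_up(price) for price in basket)
```

Nothing random happens here, so the same input always produces the same output, which is the repeatability described above.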

But this type of rounding can pose problems for calculations in machine learning, quantum computing and other technical applications, says Mantas Mikaitis, a computer scientist at the University of Manchester in England.

“Always rounding to nearest could introduce bias in computations,” Mikaitis says. “Let’s say your data is somehow not uniformly distributed or your rounding errors are not uniformly distributed. Then you could keep rounding to a certain direction that will then show up in the main result as an error or bias there.”

An alternative technique called stochastic rounding is better suited for applications where the round-to-nearest approach falls short, Mikaitis says. First proposed in 1949 by computer scientist George Elmer Forsythe, stochastic rounding “is currently experiencing a resurgence of interest,” Mikaitis and colleagues write in the March Royal Society Open Science.

This technique isn’t meant to be done in your head. Instead, a computer program rounds a value up or down at random, with probabilities that depend on how close the value is to each of the two numbers it could round to. For instance, 2.8 has an 80 percent chance of rounding to three and a 20 percent chance of rounding to two. That’s because it is 80 percent “along the way” to three and 20 percent along the way to two, Mikaitis explains. And 2.5, sitting exactly halfway, is equally likely to be rounded to two or three.
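The rule above can be sketched in a few lines of Python for rounding to whole numbers. This is an illustrative sketch, not code from Mikaitis and colleagues; the function name `stochastic_round` is hypothetical. The chance of rounding up is the fractional part of the value, so 2.8 goes to three about 80 percent of the time.

```python
import math
import random


def stochastic_round(x: float, rng: random.Random) -> int:
    """Round x to one of the two integers around it, chosen at random.

    The chance of rounding up equals how far x sits above floor(x), so a
    value is more likely to land on whichever integer it is closer to.
    """
    lower = math.floor(x)
    frac = x - lower  # for 2.8 this is about 0.8
    return lower + 1 if rng.random() < frac else lower


rng = random.Random(2024)  # seeded so runs are repeatable
trials = 100_000
share_up = sum(stochastic_round(2.8, rng) == 3 for _ in range(trials)) / trials
```

Any single call may return 2 or 3, but `share_up` comes out close to 0.8. A whole-number input such as 4.0 has no fractional part, so it is always returned unchanged.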

Which way any single rounding goes, though, is random: You can’t predict when 2.5 will be rounded up to three and when it will be rounded down to two, and 2.8 will still be rounded down to two about 20 percent of the time.

By making sure that rounding doesn’t always go in the same direction for a particular number, this process helps guard against what’s known as stagnation. That problem “means that the real result is growing while the computer’s result” isn’t, Mikaitis says. “It’s about losing many tiny measurements that add up to a major loss in the final result.”
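Stagnation is easy to reproduce with a toy example. The sketch below assumes a hypothetical "machine" that can store only whole numbers (a stand-in for a low-precision number format) and adds 0.3 to a running total 10,000 times, rounding after every step. The helper names are illustrative.

```python
import math
import random


def stochastic_round(x: float, rng: random.Random) -> int:
    """Round x up with probability equal to its fractional part, else down."""
    lower = math.floor(x)
    return lower + 1 if rng.random() < x - lower else lower


def accumulate(increment: float, steps: int, rounder) -> int:
    """Add `increment` repeatedly, rounding the running total after every step.

    The toy machine can only store whole numbers, so each partial sum
    is rounded before the next addition.
    """
    total = 0
    for _ in range(steps):
        total = rounder(total + increment)
    return total


rng = random.Random(7)
steps = 10_000
exact = 0.3 * steps                                   # about 3,000
nearest = accumulate(0.3, steps, round)               # 0.3 rounds to 0 every time
stochastic = accumulate(0.3, steps, lambda v: stochastic_round(v, rng))
```

With round-to-nearest the total never leaves 0: the real result grows while the computer's result does not. With stochastic rounding about 30 percent of the steps round up, so the total lands near 3,000 (the exact value varies with the seed).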

Stagnation “is an issue in computing generally,” Mikaitis says, but it poses the biggest problems in applications such as machine learning, which often involve adding up lots of values, some of them much larger than others (SN: 2/24/22). With the round-to-nearest method, small values added to a much larger running total can be rounded away every time, which results in stagnation. But with stochastic rounding, each rounding has some chance of going either way, which, in a series of mostly small numbers interrupted by a few large outliers, helps keep those oversized values from always dominating the result.
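A sum where one value dwarfs the rest shows the effect. This sketch uses a hypothetical decimal format that keeps only three significant digits, so the spacing between storable numbers grows with magnitude, as it does in floating point: whole numbers are storable below 1,000, but only multiples of 10 between 1,000 and 10,000. The format and all helper names are assumptions for illustration.

```python
import math
import random


def gap(x: float, digits: int = 3) -> int:
    """Spacing between neighboring storable values near x in a toy decimal format.

    Hypothetical format: values of 10**(digits - 1) and above keep only
    `digits` significant digits; smaller values are stored as whole numbers.
    """
    g = 1
    while abs(x) >= 10 ** digits * g:
        g *= 10
    return g


def round_nearest(x: float) -> int:
    g = gap(x)
    return round(x / g) * g


def round_stochastic(x: float, rng: random.Random) -> int:
    g = gap(x)
    lower = math.floor(x / g) * g
    # Round up with probability equal to the fraction of the gap x has covered.
    return lower + g if rng.random() < (x - lower) / g else lower


def running_sum(values, rounder) -> int:
    total = 0
    for v in values:
        total = rounder(total + v)  # every partial sum must fit the format
    return total


# Mostly small values with one large outlier in the middle.
values = [0.4] * 500 + [1000.0] + [0.4] * 500
rng = random.Random(11)
exact = math.fsum(values)                             # about 1,400
nearest = running_sum(values, round_nearest)          # 1,000: every 0.4 is lost
stochastic = running_sum(values, lambda v: round_stochastic(v, rng))
```

Round-to-nearest returns exactly 1,000, because each 0.4 is rounded away both before and after the outlier. The stochastic total lands near 1,400, since the small values still register on average.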

Most computers aren’t yet equipped to perform true stochastic rounding, Mikaitis notes. The machines lack random number generators, which are needed to execute the probabilistic decision of which way to round. However, Mikaitis and his colleagues have devised a method to simulate stochastic rounding in these computers by combining the round-to-nearest method with three other types of rounding.
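Software can mimic stochastic rounding when the hardware doesn't offer it. The sketch below is a generic illustration, not the method Mikaitis and colleagues devised: it stochastically rounds an exact rational number to a 64-bit float in Python. It uses round-to-nearest (Python's correctly rounded `Fraction`-to-`float` conversion) to get one neighbor, `math.nextafter` (Python 3.9 or later) to get the other, and Python's pseudorandom generator to choose between them. The function name `stochastic_round_to_double` is hypothetical, and overflow and other edge cases are ignored.

```python
import math
import random
from fractions import Fraction


def stochastic_round_to_double(q: Fraction, rng: random.Random) -> float:
    """Stochastically round an exact rational q to a neighboring 64-bit float.

    Sketch only: assumes q lies within the finite double range.
    """
    nearest = float(q)                # round-to-nearest conversion
    if Fraction(nearest) == q:
        return nearest                # q is exactly representable
    # Bracket q between the nearest float and its neighbor on the other side.
    if nearest < q:
        lower, upper = nearest, math.nextafter(nearest, math.inf)
    else:
        lower, upper = math.nextafter(nearest, -math.inf), nearest
    # Probability of rounding up = fraction of the gap that q has covered.
    p_up = (q - Fraction(lower)) / (Fraction(upper) - Fraction(lower))
    return upper if rng.random() < p_up else lower


rng = random.Random(3)
third = stochastic_round_to_double(Fraction(1, 3), rng)  # one of the two floats around 1/3
```

The pseudorandom generator supplies the random decision that the hardware lacks; everything else reuses ordinary rounding.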

Stochastic rounding’s need for randomness makes it particularly suited to quantum computing applications (SN: 10/4/21). “With quantum computing, you have to measure a result many times and then get an average result, because it’s a noisy result,” Mikaitis says. “You have that randomness in the results already.”

A new treatment could restore some mobility in people paralyzed by strokes

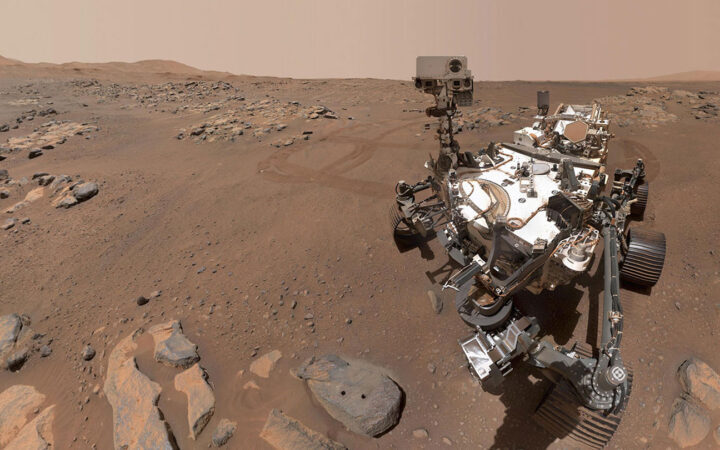

A new treatment could restore some mobility in people paralyzed by strokes  What has Perseverance found in two years on Mars?

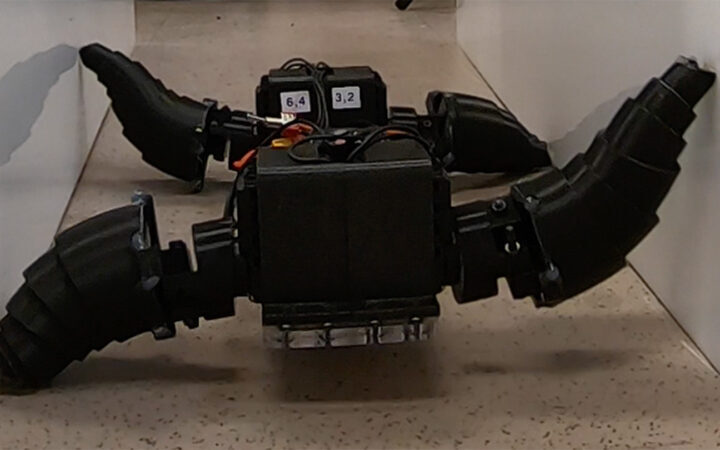

What has Perseverance found in two years on Mars?  This robot automatically tucks its limbs to squeeze through spaces

This robot automatically tucks its limbs to squeeze through spaces  Greta Thunberg’s new book urges the world to take climate action now

Greta Thunberg’s new book urges the world to take climate action now  Glassy eyes may help young crustaceans hide from predators in plain sight

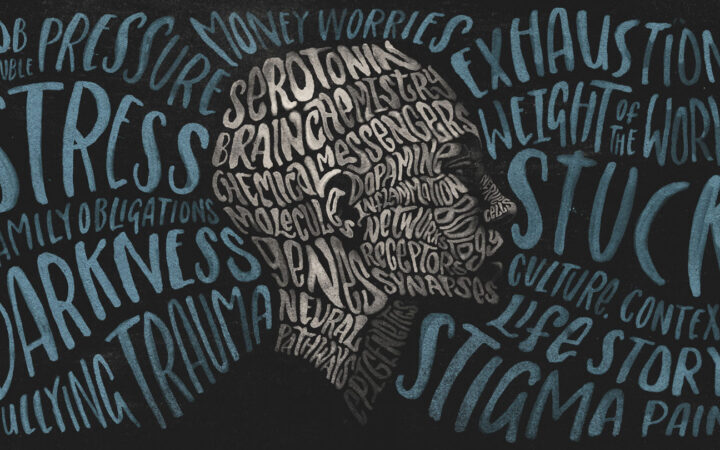

Glassy eyes may help young crustaceans hide from predators in plain sight  A chemical imbalance doesn’t explain depression. So what does?

A chemical imbalance doesn’t explain depression. So what does?